Java 8, released in 2014, introduced lambdas and the possibility to write code in a functional style through streams of elements. C++11 standard was published in 2011, and it introduced lambdas too, which altogether with old and new functions in algorithm (e.g. accumulate(), for_each(), count(), transform()) allow for a functional style in C++.

According to this, Java streams are monads (my nightmare when trying to do things in Haskell).

I expect that most of the time a loop which can be translated easily into a map operation in functional style performs, in the worst case, the same as the functional counterpart.

Test boilerplate

import java.util.stream.*;

import java.util.ArrayList;

public class Test

{

static double ffact(double l) {

double f = l;

for (double a = 1.0; a < l; a++) {

f *= a;

}

return f;

}

public static void main(String[] args) {

// ...

long start = System.nanoTime();

// run

long end = System.nanoTime();

// (end - start) is the very approximated duration

// in ns.

}

}The function ffact() is not really important; consider it just a way to waste CPU time.

Primitive array

First, let's try with a primitive array filled with N numbers from 0 to N-1.

double[] n = new double[N];

// ...

for (i = 0; i < N; i++)

{

n[i] = ffact(n[i]);

}(Why double instead of long, considering that a factorial is an integer? Doubles are computationally more “intense”, and we want to waste time. Besides, doubles could give order of magnitude even when a long has already overflowed1.)

The average of ten simulated runs is 204 ms on my system. The array is modified in place, hence we don't use extra memory. Running it several time from the command line gives values comparable to the average of the ten simulated runs.

Streaming

The first difference is that primitive arrays aren't streamable. I've used an ArrayList as you can deduce from the test boilerplate code.

The second important difference is that you don't modify the array in place: the stream is immutable (standard thing for functional languages), so you can only apply the function to each element and collect the result into a collection, e.g. another ArrayList (there are several options, I've tried just this one).

This means that we have two arrays and so we need double the memory of a single array. Moreover, there's the overhead of the ArrayList objects and of the boxed doubles — because with ArrayList you can't use primitive types.

ArrayList<Double> res = m.stream()

.map(l -> ffact(l))

.collect(Collectors.toCollection(ArrayList::new));After the mapping we need another operation to collect the results into the chosen collection.

The average of ten simulated runs is 290 ms. These runs are done with a simple for loop. Before I added this loop to have the average of several runs, I've run it “by hand” several times, and the duration was higher, between 380 and 415 ms.

Running separately for 10 times, I obtain an average of 400 ms (397.6 approximated). So I suspect there's some kind of optimisation in the case when the same stream (which is immutable…) is used again and again with the same set of operations2.

Changing stream() into parallelStream()3 makes things better. Looping in the code to get the average of ten simulated runs gives an average of 208 ms. Running it ten times from the command line gives an average of 342 ms.

Unfair?

It's unfair because we have boxed the double. Let's do the same but with an array of boxed doubles:

Double[] n = new Double[N];The average of ten simulated runs is 232 ms. Slower, but still faster of the non parallel case. Still unfair though, because we have a primitive array of objects.

Let's use an ArrayList also for the regular loop and boxed doubles everywhere, starting from the ffact() function:

static Double ffact(Double l) {

Double f = l;

for (Double a = 1.0; a < l; a++) {

f *= a;

}

return f;

}Then,

ArrayList<Double> n = new ArrayList<>();

// ...

for (i = 0; i < N; i++)

{

n.set(i, ffact(n.get(i)));

} A single run lasted beyond 1000 ms. It becomes a lot better if we avoid Double in the ffact() function. In this case the average of ten simulated runs was 248 ms; of ten “real” runs was 218 ms.

The cost of using a boxed primitive value is high. Using ffact() with Double, a single run of the parallel stream version took more than 1000 ms too.

So, let's always use double ffact(double) in both regular loop and stream version.

Immutability

Instead of modifying the elements in place, let's put them into a new ArrayList.

ArrayList<Double> n = new ArrayList<>();

ArrayList<Double> d = new ArrayList<>();

// ...

for (i = 0; i < MAXLOOP; i++)

{

d.add(ffact(n.get(i)));

}Ten simulated runs gave an average of 205 ms per run4.

Source of stream

Of course we don't need to have an ArrayList of values from 0 up to a maximum value. Java has also ways to give sequences of numbers, e.g. IntStream.

In our case the ArrayList is just a container for values from 0 to MAXLOOP-1.

ArrayList<Double> res = IntStream.range(0,MAXLOOP-1)

//.parallel()

.mapToDouble(l -> ffact(l))

.boxed()

.collect(Collectors.toCollection(ArrayList::new));The simulated runs take 292 ms on average with parallel() commented out, and 210 ms on average with parallel() uncommented (the average of ten real single run of the parallel version was 344 ms).

Note that what we need to do to obtain our ArrayList<Double> is rather tedious. We need mapToDouble instead of simply map, and then we need boxed() before collect().

Compare with, say, Ruby

res = (0 .. MAXLOOP-1) .map {|x| factorial(x)}or Python

res = list(map(lambda x: factorial(x), range(0, MAXLOOP)))or

res = [factorial(x) for x in range(0, MAXLOOP)]And, of course, Haskell:

res = map factorial [0 .. kMAXLOOP - 1]From a syntactical point of view Java is terrible in this game5.

In Haskell

Let's see how it could be done in Haskell.

dfact :: Double -> Double

dfact 0.0 = 1.0

dfact 1.0 = 1.0

dfact n = n * dfact (n - 1.0)

kMAXLOOP = 10000

produceResult = map dfact [0 .. kMAXLOOP-1]We must tell the compiler that dfact works with doubles, otherwise it will use Integer which are arbitrary precision numbers — we can compute 10000! for real, in theory… Instead we want the same we did in Java: to waste time (but we haven't infinite time), and we don't care about the result, provided that it is feasible to emit any result.

Haskell is lazy: it won't do anything for real unless it needs to. So we must force it and this is done by deepseq:

import Control.DeepSeq

import System.CPUTime

-- ...

main = do

start <- getCPUTime

let r = produceResult

end <- r `deepseq` getCPUTime

putStrLn $ show $ fromIntegral (end - start) / (10^12)Compiled6 and executed it outputs on average 1.2458s7.

However note that nowhere we can say to do the mapping in parallel. I've tried with:

import Control.Parallel.Strategies

-- ...

produceResult = parMap rpar dfact [0 .. kMAXLOOP-1]added the option -threaded and run with +RTS -N. It took longer: 2.058s on average8! (It isn't an uncommon problem: see this forum.)

It's not the first time Haskell surprises me with performance like these. Maybe the compiler can be blamed, maybe other compilers can do better (I doubt it), maybe it shouldn't be the way you do such things, maybe among the benefits of Haskell there isn't performance.

Needless to say, if in a run you don't need all the values, Haskell takes advantage from its laziness.

main = do

start <- getCPUTime

let r = last produceResult

end <- r `deepseq` getCPUTime

putStrLn $ show $ fromIntegral (end - start) / (10^12)

putStrLn $ show rHere we take only the last value, and only this last value is computed for real. The output is something like

0.0

InfinityBut this is a trick, sort of. In other languages it would mean to write the code so that it computes whatever only when actually requested for, and the request only for the last value. Instead, we want to compute everything on the whole array.

In C++11

Let's write this wasteful computation in C++11.

The plain loop code is very similar to the Java version (using primitive type double). The test bed is something like this:

#include <chrono>

typedef std::chrono::system_clock Time;

//typedef std::chrono::high_resolution_clock Time;

typedef std::chrono::milliseconds ms;

// ...

int main()

{

// ...

auto start = Time::now();

// waste your time here

auto end = Time::now();

auto dur = std::chrono::duration_cast<ms>(end - start);

std::cout << dur.count() << "\n";

return 0;

}The average on ten runs9 was 101 ms, using all optimisation the compiler can provide (-O3). With a little bit of playing with optimising options and -S to look into the generated assembly code, it seems that the key factor for the 101 ms average is the inlining of the ffact() function. Compiling with -O3 -fno-inline-functions -fno-inline-small-functions, I obtain a 3.23s average.

A little bit better adding -fopenmp and #pragma omp parallel for before the for, but still 2.7s (average) with 2 threads.

It seems like the loop version of Java can beat C++, 200 vs 3000 ms! And anyway, with inline optimisation, C++ is only 2x faster, which isn't that much.

I've already seen a similar problem10, and it turns out I have to add -msse2 -mfpmath=sse to make the compiler use SSE (Java JIT compiler very likely exploits this). With these options added, I obtain an average of 125 ms.

If I remove the options to avoid inlining, I obtain an average of 88 ms.

Now, let's use vector:

std::vector<double> v(n, n + MAXLOOP);

std::vector<double> vd(MAXLOOP); // to avoid push_back

//...

for (i = 0; i < MAXLOOP; ++i) {

vd[i] = ffact(v[i]);

} The average of ten (or so) runs is 125 ms.

Let's do it in a functional way:

std::transform(v.begin(),

v.end(),

vd.begin(),

[](double x) { return ffact(x); });The average of ten runs is again 125 ms. Indeed there's no reason to use a vector: we can use the primitive double arrays:

std::transform(n,

n+MAXLOOP,

d,

[](double x) { return ffact(x); });The average doesn't diminish, it is still 125 ms (more or less). We can speculate that the extra cost is not related to the way it must access the data, but to something else, maybe an optimisation opportunity which is lost because of the vector in the loop version, and because of the transform in the “functional” version — so that using plain arrays doesn't give back the chance for that optimisation.

We can't try to parallelize transform, unless we use a parallelized version of it — but then we need GCC to support these features from C++17 (6.3.0 doesn't). Or we need to use GCC extensions for parallelism11:

#include <parallel/algorithm>

// ...

__gnu_parallel::transform(n,

n+MAXLOOP,

d,

[](double x) { return ffact(x); });The average (on 20 runs) is 65 ms. This performance doesn't get worse if we use vector again:

__gnu_parallel::transform(v.begin(),

v.end(),

vd.begin(),

[](double x) { return ffact(x); });It is still around that value (indeed, 68 ms, average on 20 runs — vs 344 ms of the parallel Java stream), and slightly faster than the loop version.

With the proper optimisation, C++ gives the performance we expect from it. Java anyway isn't that bad12, and it still runs better than compiled Haskell.

Fortran

Fortran hasn't functional style, but it could be interesting to see how it runs in this game.

The compiler (gfortran) is “smart” enough to see that it can compute all the values at compile time. And so the Fortran version is the fastest, of course. Compilation time, however, isn't bad13 and it accounts for the whole compilation process and the computation of the values:

$ time gfortran-6.3 -O3 -msse2 -mfpmath=sse el.f08

real 0m0.289s

user 0m0.260s

sys 0m0.016sAnyway, the test bed:

program El

use, intrinsic :: iso_fortran_env

implicit none

! ...

real :: start, finish

call cpu_time(start)

! ...

call cpu_time(finish)

print *, (finish - start) * 1000.0

contains

! ... fact...

end program ElThe function fact is simply:

elemental pure function fact(x) result(r)

real(kind=real64), intent(in) :: x

real(kind=real64) :: r

integer :: a

r = x

do a = 1, int(x)-1

r = r * real(a)

end do

end function factand you use it like this:

d = fact(n)where n is our input array and d the output array. They are declared (and eventually initialised) like this:

integer, parameter :: MAXLOOP = 10000

integer :: i

real(kind=real64), dimension(MAXLOOP) :: n = [ (i, i=1,MAXLOOP) ]

real(kind=real64), dimension(MAXLOOP) :: dBut, as I've said, there's a problem: everything's computed at compile-time. To avoid this, n isn't initialised anymore:

real(kind=real64), dimension(MAXLOOP) :: nbut filled by a subroutine (no more clues about the feasibility of the compile-time computation):

call fill_it(n)which is simply this:

subroutine fill_it(a)

implicit none

real(kind=real64), dimension(:), intent(out) :: a

integer :: i

do i = lbound(a,1), ubound(a,1)

a(i) = i

end do

end subroutine fill_itUnfortunately the compiler does another obvious optimisation: it removes unused things: we never use d, and so we don't need to evaluate the code which fills it. (Do not forget: we have a pure function, fact, so it knowns that there aren't side effects associated to the actual execution of the function).

So I had to add:

write (error_unit, *) d(170)just before the end14.

The average of several (10) runs is 124 ms, more than the C++ loop version with inlining (88 ms15). Fortran's syntax d = fact(n) can be thought analogical to a map/apply16, and it is also cleaner than an explicit loop. It could be parallelised, likely even behind the curtains, but gfortran doesn't. Also, converting the clean d = fact(n) into a regular do-loop in order to use OpenMP (!$omp parallel do), doesn't improve performance17. I haven't investigated why.

Conclusion

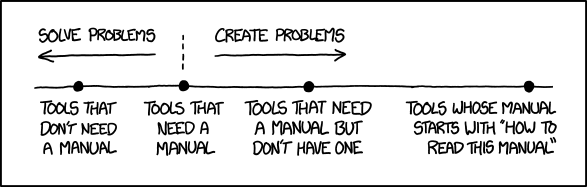

Java streams don't exist just to give a touch of functional style to your programs, or to write loops in a new fancy syntax, or because functional is cool now. They are tools, and like any other tool, they must be used in the right way for the purpose they were created for, or anyway when and if your program can benefit from them.

Pure functional languages have advantages when you need parallelization, because of the referential transparency; it's carved in their DNA.

Also, functional syntax can be terse and more expressive, but it isn't always so… and anyway this alone isn't a good reason for “going functional”, at least not in Java.

So, as I see it, Java streams should be used only when you need to grab parallelization opportunities — despite the fact that you have to “parallelize” the stream explicitly, as if it could worth using streams when parallelization isn't in your mind: maybe there are other scenarios where it makes sense, but I don't see any right now.

Java streams are monads (briefly — and likely not 100% correctly — a way to cope with side effects in a pure functional language), and Java's lambdas are “half closures”18 (and anyway you can call impure functions inside them), opening a big trapdoor over problems if all this is used naively with concurrency19.

A Java long should be a signed 64 bit, thus the biggest positive value it can hold is 9,223,372,036,854,775,807, while a double's exponent can represent numbers up to 10308 (of course the digits precision is very much smaller). See e.g. Double-precision floating-point format. The function

ffact()is supposed to compute the factorial of a number, and notoriously it grows rather fast. Using the Stirling's approximation I've estimated that with N > 88 even the double isn't enough — empirical tests have shown that I overestimated something, because the limit seems to be 170. However it doesn't matter because in my tests N is a lot bigger (10000); that is, all those computations won't give a meaningful value (the double should hold a special value which can be read as infinity). But they will waste time, anyway.↩Immutability altogether with no side effects allows several kinds of optimisation.↩

ArrayListisn't synchronised, but I think here we don't need to externally synchronise it.↩The array

nis loaded only once anddis cleared (d.clear()) in the external loop that simulates each run. The arraynisn't changed, so there's no need to reset its elements at each run. Though, if we do so, the average raises to 240 ms; of course bothd.clear()and the reset ofnare outside the internal loop and don't contribute to its duration. That is, whenndoesn't change, there must be an optimisation in some point. Maybe it's then.get(i)which becomes faster. It should be analysed further.↩Among Ruby, Python, and Haskell, only Haskell is strongly typed, even though you can't say from this example. All those languages haven't problem handling big numbers (arbitrary precision), which of course are more computationally expensive. So I suggest not to run these examples, unless you stick to doubles or integers (overflowed for most of the time, from

88170 — or so — on).↩With

-O2 -msse2because without these on my systemghcwon't use SSE instructions. Without it, it's about 6 times slower.↩The time is taken by

getCPUTimewhich gives the time in ps. I don't know if the “precision” can be trusted, but it is how it's done here, where anyway they suggest the use of the package criterion.↩It's better using the run-time option

-qm, “Don't automatically migrate threads between CPUs” (to use it, you need to compile with-rtsopts): 1.236s. Still slower than the non-parallel version, and still slower than Java! Usingrseqinstead ofrparmakes things worse (avg. 1.245s).↩From now on, when I talk about several runs, I always mean actual run: I collect the durations in a file and then I compute the average (using Octave, if

ris the file:load r,mean(r), that's all).↩The test idea used here is indeed inherited, so to speak, from an article on an Italian very nice blog, OK Panico. The article that raised the problem was this. My article analysing the “problem” is this. You have to know Italian to enjoy these contents. Anyway you can go through code snippets; even if silly, I'm rather glad that I had this idea of converting JVM code into C; see section Intermezzo: un'idea sciocca, where it is shown what I did. There's the source on my GitHub account, too.↩

See GCC manual on using parallel mode.↩

“Only” 5x slower than C++. Tried also to run the test with

java -XX:+AggressiveOpts, I haven't seen improvements.↩It's always GCC 6.3.0.↩

Written to standard error because this way I can redirect it to

/dev/nulland collect only the duration (to compute the average).↩But compiling with

-Ofastproduce a code which takes 52 ms on average (of 10 runs). Using-Ofastin the the C++ loop code doesn't make it faster (still 88 ms, more or less, on average).↩Indeed it's like an array programming language; but an operation like

mapwhich takes a function and applies it to each element of its second arguments makes such a “functional style” very array-programming like. E.g.f (1 2 3 4)vsmap f (1 2 3 4).↩Tried also with

forall. In this case gfortran compiles a regular loop, no parallelisation in sight — it seems it doesn't support it.↩They can close only over final or effectively final variables, i.e. over constants. That is, they close over values, not (actual) variables. This in fact reduces the dangers I alluded to in the text, unless you bypass this restriction using a reference: the reference doesn't change, so it is seen as “final”, but it can be a mutable instance of a class (a status), and there again problems with concurrency if you use all these features naively.↩

This is why here and there you can read about the need for external synchronisation when using unsynchronised classes like

ArrayList.↩

No comments:

Post a Comment